Since the coronavirus pandemic started, fake news, half-truths, and conspiracy theories have been booming. Even before the crisis, business and political leaders considered them to be the greatest cyber threat. Together with Fraunhofer FKIE and NewsGuard, pressrelations is working on solutions for the early detection of disinformation. In this interview, the three partners explain what exactly is behind their method and how reputation risks can be minimized in the future.

JENS – FOR ALMOST 20 YEARS NOW, PRESSRELATIONS HAS BEEN SCOURING ALL KINDS OF MEDIA ON A DAILY BASIS AND ANALYZING THE RESULTS SO THAT CUSTOMERS HAVE A SOLID BASIS FOR THEIR COMMUNICATION WORK. HOW DO YOU SEE THE DEVELOPMENT OF FAKE NEWS AND DISINFORMATION?

Jens Schmitz: Conspiracy theories and false reports are not a new phenomenon in themselves. They have always flourished, especially in times of crisis. But the Internet has fundamentally changed the media world, making it much more complex and confusing. Social media plays a decisive role in this. It has immensely increased the number of distribution cycles. Anyone can take on the role of a medium, disseminate information that does not necessarily meet journalistic standards, and achieve wide coverage.

In this context, the media scientist Bernhard Pörksen coined the term “indignant democracy”, in which everyone can join in, let their anger run free and spread their own “truths”. In addition, the corona pandemic currently offers an optimal breeding ground for disinformation.

HOW DO YOU DEAL WITH THIS AS A MEDIA MONITORING COMPANY?

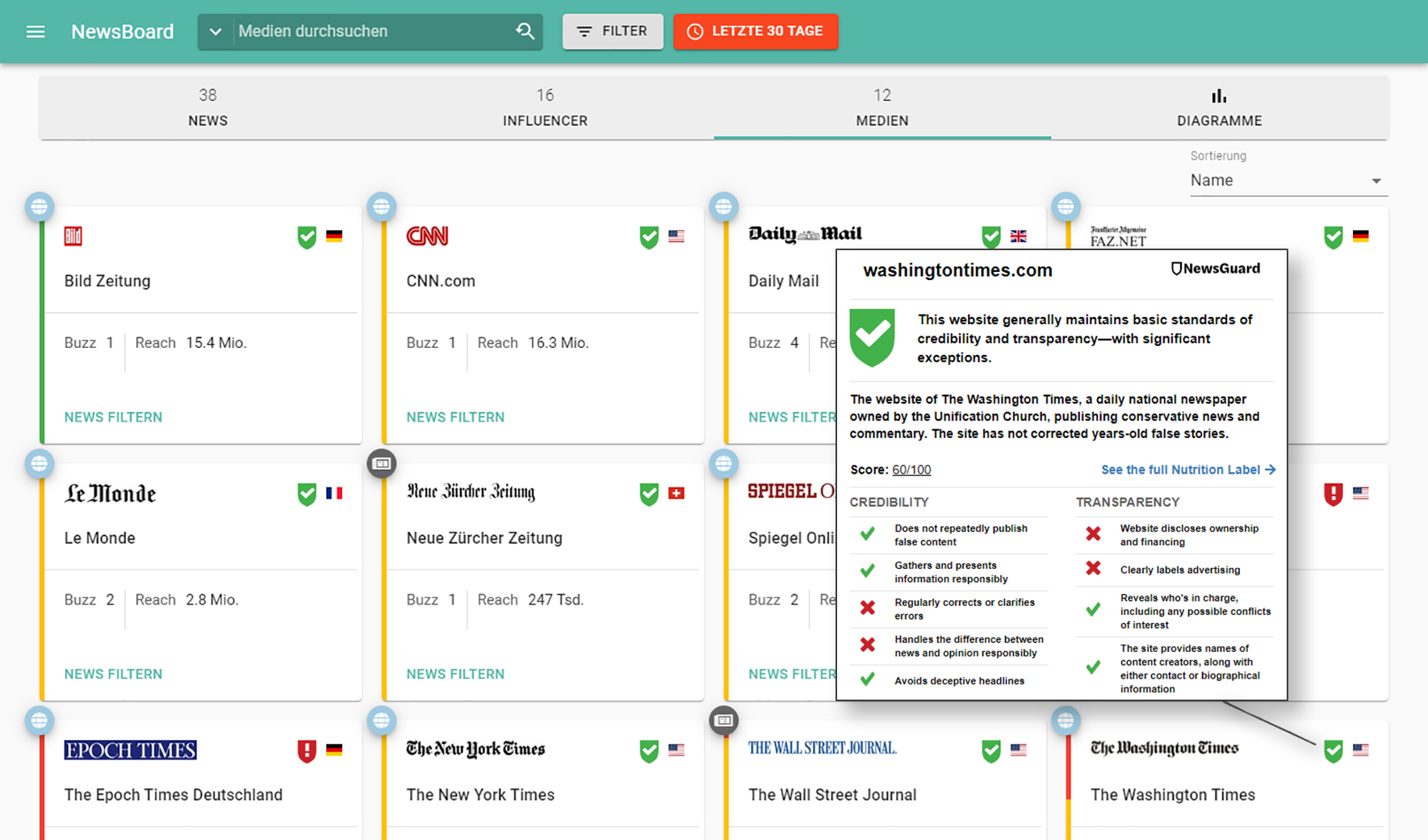

Jens Schmitz: It’s an exciting challenge for us. Our customers not only expect an overview of how their topics of interest are developing in the media. For strategic decisions in communications work, they also need classification and evaluation of the results. This also includes assessing the credibility of the sources and their content. This is particularly crucial for crisis prevention. If a company gets into a PR debacle or is associated with false information, this can cause serious damage to its reputation. We want to counteract this and alert customers at an early stage if questionable content is circulating about them, their products, or in connection with their core topics of interest.

IN JULY, PRESSRELATIONS, FRAUNHOFER FKIE, AND NEWSGUARD ANNOUNCED THAT THEY WOULD BE TAKING JOINT ACTION AGAINST FAKE NEWS. WHAT ADVANTAGES CAN CUSTOMERS AND USERS EXPECT FROM THIS COOPERATION?

Jens Schmitz: In the future, customers will gain a better overview of the credibility of the media sources that report on them, and will be able to better identify and counteract reputation risks through targeted fake news monitoring and alerting. In the analytical area, it will also be possible to identify patterns of how false information is spread. From this, in turn, certain rules can be derived for the future.

PROFESSOR SCHADE, TOGETHER WITH THE RESEARCHERS OF FRAUNHOFER FKIE YOU HAVE DEVELOPED A TOOL THAT ENABLES AUTOMATED ANALYSIS OF CONTENT AND PROVIDES INFORMATION ABOUT DELIBERATELY DISSEMINATED DISINFORMATION. THE WHOLE THING WORKS LIKE A KIND OF “SPAM FILTER”. WHEN DO YOUR ALGORITHMS SOUND THE ALARM?

Professor Dr. Ulrich Schade: To answer this question, I have to briefly outline how such programs generally work. First of all, you train two language models, one with examples of serious journalistic sources and one with examples of “fake” ones. This way the program is trained to recognize the linguistic differences between the two types of text by certain features. For example, the relationship between adjectives and nouns can be one such feature. If, for example, the “fake” sample texts have on average significantly more adjectives than the serious news texts and if a text to be evaluated then has many adjectives compared to the number of nouns, this is a single indication that the text could be “fake”.

For the evaluation, the tool uses many such characteristics, not only linguistic ones, but also those from metadata. Nevertheless, the quality of the tool depends on the text examples it has been trained with. If the examples are poorly chosen, the tool will not deliver good results.
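
The adjective-to-noun feature described above can be illustrated with a minimal sketch. It is not the Fraunhofer FKIE tool: the function names, the tiny hand-tagged example texts, and the "closer to which average" rule are all assumptions made for illustration, and a real classifier would combine many such features rather than rely on one.

```python
from statistics import mean

# Hypothetical, hand-tagged example texts as (word, part-of-speech) pairs.
# A real system would get these tags from a part-of-speech tagger.
SERIOUS_EXAMPLES = [
    [("court", "NOUN"), ("rules", "VERB"), ("on", "ADP"),
     ("new", "ADJ"), ("law", "NOUN")],
]
FAKE_EXAMPLES = [
    [("shocking", "ADJ"), ("secret", "ADJ"), ("plan", "NOUN")],
]


def adjective_noun_ratio(tagged_tokens):
    """Return the number of adjectives per noun in one tagged text."""
    adjectives = sum(1 for _, tag in tagged_tokens if tag == "ADJ")
    nouns = sum(1 for _, tag in tagged_tokens if tag == "NOUN")
    return adjectives / nouns if nouns else 0.0


def ratio_points_to_fake(tagged_tokens, serious_examples, fake_examples):
    """Return True if the text's ratio is closer to the 'fake' average.

    This is a single indication only, not a verdict on the text.
    """
    serious_mean = mean(adjective_noun_ratio(t) for t in serious_examples)
    fake_mean = mean(adjective_noun_ratio(t) for t in fake_examples)
    ratio = adjective_noun_ratio(tagged_tokens)
    return abs(ratio - fake_mean) < abs(ratio - serious_mean)


# Hypothetical text to be evaluated: three adjectives, one noun.
CANDIDATE = [
    ("outrageous", "ADJ"), ("hidden", "ADJ"), ("dangerous", "ADJ"),
    ("truth", "NOUN"), ("revealed", "VERB"),
]

if __name__ == "__main__":
    print(ratio_points_to_fake(CANDIDATE, SERIOUS_EXAMPLES, FAKE_EXAMPLES))
```

As the interview notes, the result depends entirely on the example texts: with poorly chosen training examples, the two averages say little about the real difference between the two kinds of text.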

DO YOU HAVE OTHER EXAMPLES OF HOW FAKE NEWS CAN BE RECOGNIZED?

Professor Dr. Ulrich Schade: We looked for Russian influence in the context of the 2017 federal elections in Germany. Some of the fake news items we analyzed were written by Russians who speak very good German but are not native speakers. In Russian, for example, there is the peculiarity of replacing the “is” with a dash. This resulted in dashes in the wrong places in the corresponding texts. Orthography, sentence structure, and also the choice of words, e.g. the use of superlatives and “aggressive” adjectives, can be further indications of fake news. It always depends on the topic in question: If “corona” occurs in connection with “vaccinations” and “microchips”, this would be an alarm signal, for example.

NOW THERE ARE MANY DIFFERENT TYPES OF FAKE NEWS – FREELY INVENTED AND THOSE THAT DELIBERATELY TWIST INDIVIDUAL FACTS – HOW DO YOUR MACHINES DEAL WITH THIS DIVERSITY?

Professor Dr. Ulrich Schade: As I said, the crucial thing is that when you train the classifier, you provide it with correct sample texts. Metadata is also evaluated, such as who posts when and how often. Usually bots are still involved in the background, forming a network that is then flooded with false information. The more often people hear something, the faster they believe it, and if many tell the same story, it is more likely to be believed to be true. We can recognize the structures of these networks via so-called metadata.
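
One way to picture the metadata signal mentioned above ("who posts when and how often") is the following sketch. It is an assumption-laden illustration, not the method used by Fraunhofer FKIE: the post format, the function name `coordinated_stories`, and the thresholds are hypothetical. It flags a story when several distinct accounts post the same text within a short time span.

```python
from collections import defaultdict

# Hypothetical posts as (account, time in seconds, text).
POSTS = [
    ("acct_a", 0, "Chips in the vaccine!"),
    ("acct_b", 30, "chips in  the vaccine!"),
    ("acct_c", 45, "Chips in the VACCINE!"),
    ("acct_d", 50, "Council approves new budget"),
    ("acct_a", 4000, "Council approves new budget"),
]


def normalize(text):
    """Lowercase the text and collapse whitespace so near-copies match."""
    return " ".join(text.lower().split())


def coordinated_stories(posts, min_accounts=3, window_seconds=60):
    """Return stories posted by many distinct accounts in a short span.

    min_accounts and window_seconds are illustrative thresholds only.
    """
    by_story = defaultdict(list)
    for account, timestamp, text in posts:
        by_story[normalize(text)].append((timestamp, account))

    flagged = []
    for story, entries in by_story.items():
        accounts = {account for _, account in entries}
        times = [timestamp for timestamp, _ in entries]
        if len(accounts) >= min_accounts and max(times) - min(times) <= window_seconds:
            flagged.append(story)
    return sorted(flagged)


if __name__ == "__main__":
    print(coordinated_stories(POSTS))
```

A pattern like this is only a hint that a network may be at work; legitimate breaking news is also repeated by many accounts within minutes, so such a signal would need to be combined with content features.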

PRESSRELATIONS MONITORS ABOUT 2 MILLION NEWS SOURCES ACROSS ALL MEDIA CHANNELS ON A DAILY BASIS. YOU USE THIS NEWS TO FURTHER DEVELOP YOUR PROGRAM. WHY ARE THESE LARGE AMOUNTS OF DATA IMPORTANT?

Professor Dr. Ulrich Schade: We need good language models for both serious news and “fake” news. To create them, we need many examples, but a large number of examples only has a positive effect if it also covers special cases. If a human being learns what a bird is, it is not enough to present songbirds and birds of prey as examples of birds, and cats and monkeys as examples of non-birds. This does not provide a basis for correctly classifying bats, penguins, insects, and ostriches as “bird” or “not a bird”.

DR. MEISSNER, IN THE ANALYSIS AND EVALUATION OF FAKE NEWS, YOU AS A JOURNALIST AND COMMUNICATION RESEARCHER, TOGETHER WITH YOUR COLLEAGUES FROM NEWSGUARD, START AT THE SOURCE. WHY?

Dr. Florian Meißner: Our focus is indeed the analysis of news and information websites. The refutation of individual false messages by fact-checkers, the so-called “debunking”, is of course very important. There are many good organizations in this field that had their hands full during the corona crisis. However, there is a central problem with fact-checking: verification and correction usually only come when the false message has already spread virally and has had its effect. It is then almost impossible to reverse this effect, because once a person has formed a picture of a situation, it is difficult to correct it. That’s why we start at the other end and do “prebunking”. We make users aware from the outset if an information source has been shown to be an unreliable medium.

HOW DOES A MEDIA CHECK WORK EXACTLY?

Dr. Florian Meißner: The editorial process behind it involves several loops. Each individual rating results from the participation of at least three NewsGuard journalists. We look at how a site has reported in recent years and apply various credibility and transparency criteria, which are also used in journalistic training, for example. The results of our ratings are shown to users at a glance via our red and green NewsGuard symbols; all details and the corresponding sources can then be read in our media profiles.

WHAT DOES A MEDIUM HAVE TO BE LIKE TO GET 100 POINTS?

Dr. Florian Meißner: We have a catalog of nine basic journalistic criteria, which are weighted according to their importance. Some concern credibility: Has a website repeatedly disseminated false information without correcting it? Is information gathered and presented responsibly, or are quotes or facts taken out of context? Are there correction guidelines, and are corrections actually published regularly? Are news and comments separated? Are there misleading headlines? Others concern transparency: Are ownership relationships disclosed? Is advertising marked as such? Are those responsible for editorial content named? And is there information about the authors?
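
How nine weighted criteria can add up to a score out of 100 is sketched below. The criterion names paraphrase the questions in the answer above, but the individual weights and the threshold between a green and a red symbol are hypothetical values chosen for illustration; the interview does not state them.

```python
# Hypothetical weights (they sum to 100); the interview only says that the
# nine criteria are weighted according to their importance.
WEIGHTS = {
    "no_repeated_false_content": 20,
    "responsible_gathering_and_presentation": 18,
    "regular_corrections": 12,
    "news_and_comment_separated": 12,
    "no_misleading_headlines": 10,
    "ownership_disclosed": 8,
    "advertising_labeled": 8,
    "editorial_responsibility_named": 6,
    "author_information": 6,
}

# Assumed cut-off between a green and a red symbol, for illustration only.
GREEN_THRESHOLD = 60


def score(passed):
    """Sum the weights of all criteria a site meets (missing counts as failed)."""
    return sum(weight for name, weight in WEIGHTS.items() if passed.get(name, False))


def symbol(points):
    """Map a score to the color of the rating symbol."""
    return "green" if points >= GREEN_THRESHOLD else "red"


if __name__ == "__main__":
    # A hypothetical site that meets every criterion except two.
    site = {name: True for name in WEIGHTS}
    site["no_repeated_false_content"] = False
    site["regular_corrections"] = False
    points = score(site)
    print(points, symbol(points))
```

Under these assumed weights, a medium reaches 100 points only if it meets all nine criteria, and failing a heavily weighted credibility criterion costs more than failing a transparency criterion.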

HOW MANY MEDIA OUTLETS HAVE BEEN ANALYZED SO FAR?

Dr. Florian Meißner: Worldwide, there are already nearly 6,000, the majority of which are US media. We have also been active in Europe for a year and a half. In addition to Germany, we also analyze media sources in Great Britain, France, and Italy. In Germany, we have so far analyzed around 250 media outlets, but more are being added continuously.

HOW DO MEDIA OUTLETS REACT WHEN THEY RECEIVE A NEGATIVE RATING?

Dr. Florian Meißner: When ratings are bad, it is clear that you don’t always make friends. Many fact-checkers experience this in their daily work. Part of journalistic fairness is that if we come across problematic content, we give the website operators the opportunity to comment. Sometimes information on these websites is then changed, supplemented, or corrected. In these cases, we of course update our ratings.

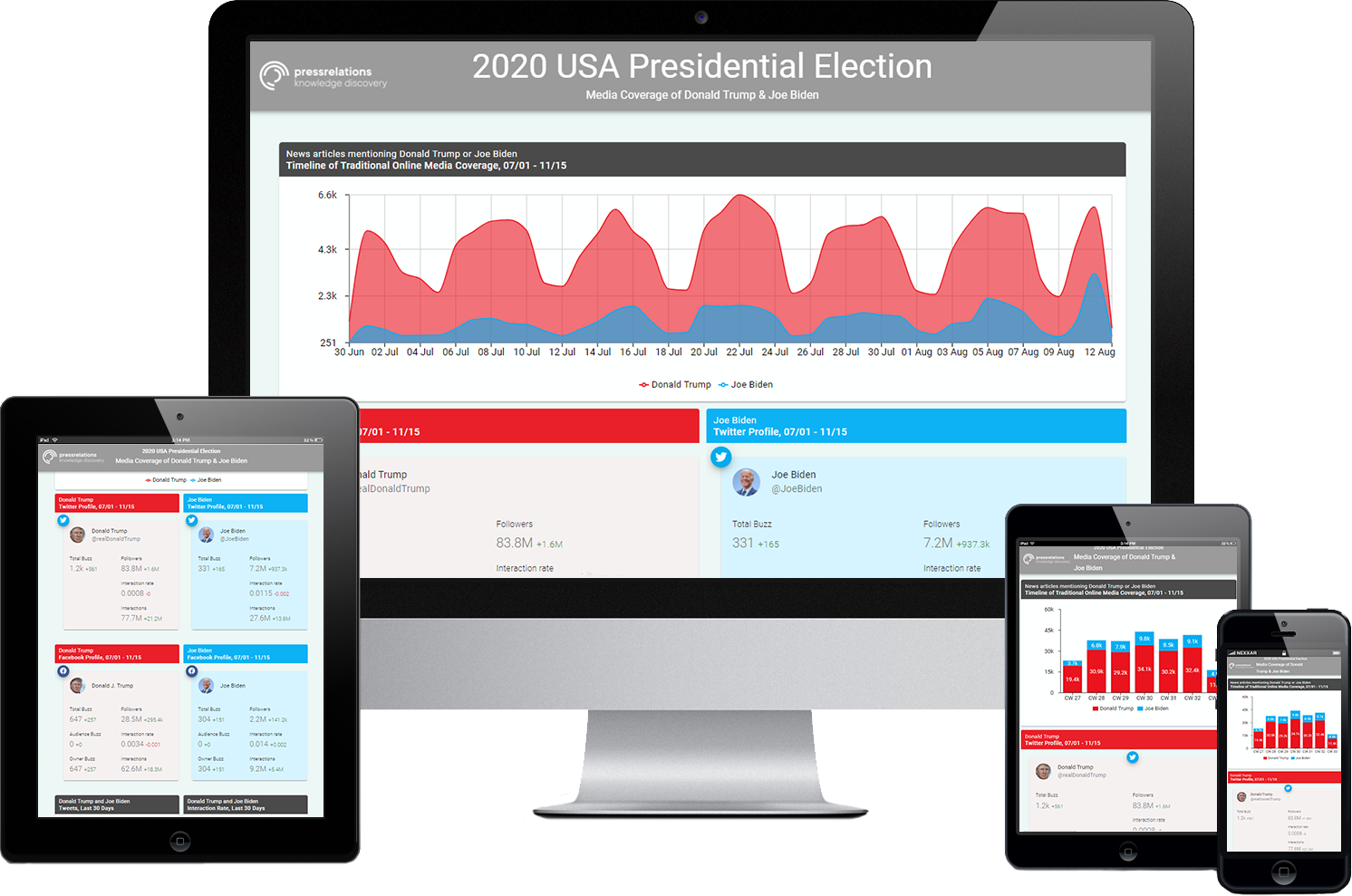

THE NEWLY DEVELOPED PROCEDURE IS USED FOR THE FIRST TIME FOR ANALYZING THE US PRESIDENTIAL ELECTION CAMPAIGN IN THE TIME OF COVID-19. HOW DID THE THREE PARTNERS COME TOGETHER FOR THIS?

Jens Schmitz: All in all, it is a matter of examining the credibility of the coverage of the U.S. election and looking at how high the proportion of disinformation is. Under the special conditions of the corona crisis, the current election campaign offers an exciting field of research.

We carry out the media analysis ourselves, but in doing so we draw on both the NewsGuard scores and the results of Fraunhofer FKIE. The first partial analysis, which has just been published, is a Twitter analysis which shows, among other things, that Biden tweets much less but has more interactions per tweet. The NewsGuard score shows that Biden shares content from much more credible sources than Trump.

MAN AND MACHINE WORK HAND IN HAND FOR THIS ANALYSIS. WHAT ARE THE ADVANTAGES?

Jens Schmitz: We have created a completely new approach. Until now, there have either been offerings that focus on content or those that are very much based on the source. The combination of the two offers the advantage of providing users with an even better overview of which media outlets and which information sources they can trust. Furthermore, sources with low credibility always have a higher reputation risk, which must be kept in mind.

Dr. Florian Meißner: From companies that are active in the field of ad management, we know that brand safety is a major issue in this context. Brand safety can also be taken further by finding out early on who is talking about a company and its issues on the net, how, and in what context. You shouldn’t wait until it’s too late to start.

Thank you very much for the interview!

Picture credits:

Cover picture: Quay Pilgrim via Unsplash.com

Portraits:

- Prof. Dr. Ulrich Schade: ©Fraunhofer FKIE

- Dr. Florian Meißner: ©Florian Meißner

- Jens Schmitz: ©pressrelations

NewsRadar®/Infoboard: ©pressrelations